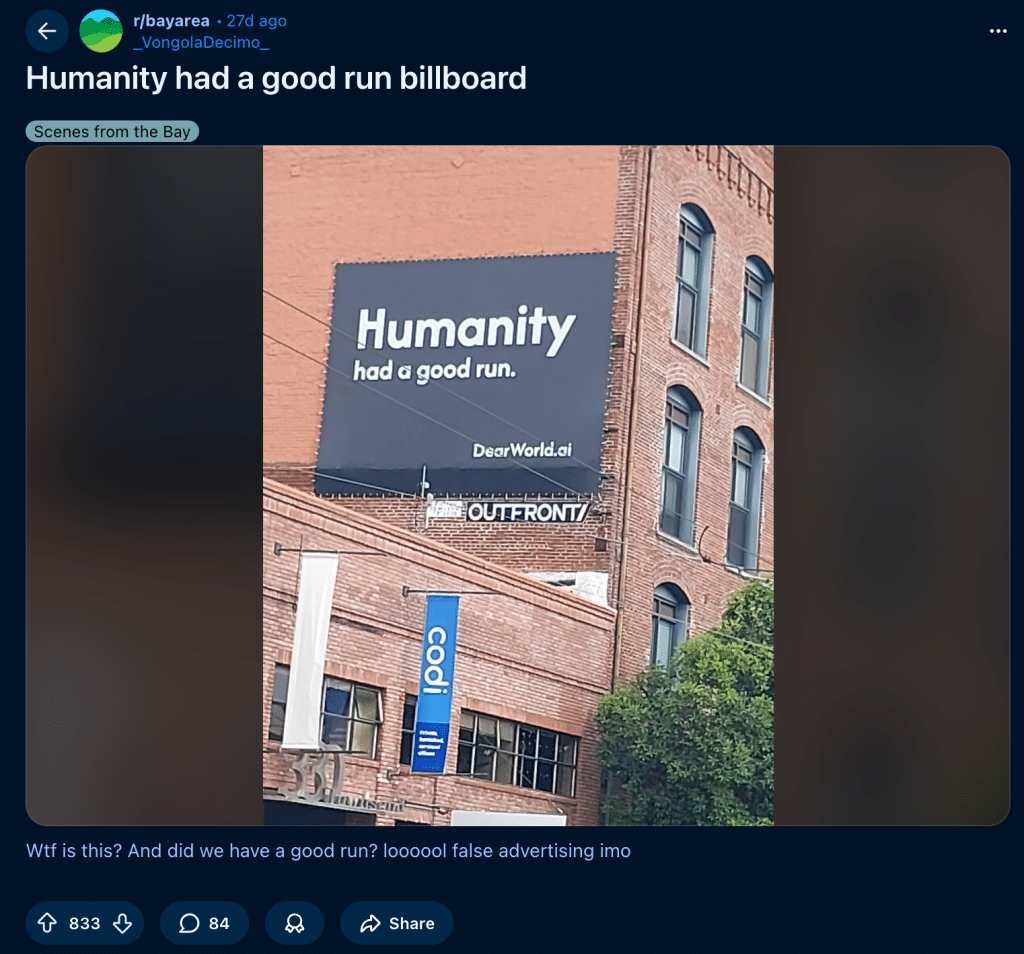

When “Humanity Had a Good Run” appeared on billboards, people stopped and stared. Was it a threat? A joke? A protest?

A few weeks later, another message appeared. This time, it was an AI app that claimed to replace human care itself: NurtureOS. It promised to help parents “calibrate their children.”

It felt wrong. And it was meant to.

But why would we, a company built on human connection, run such a provocative campaign?

Because we needed to ask a question the world has been avoiding:

What happens when innovation forgets the humans it’s meant to serve?

Why We Did It

We’re living in the trenches of productivity culture, where “efficiency” has become the holy grail. Every company is racing to automate, optimize, and accelerate.

Companies are developing tech at breakneck speed – without considering whose necks are being broken.

People around the world are using AI as a quick fix at the expense of their critical thinking, their purpose, and even their humanity.

This campaign was our line in the sand. We had to say something.

If we continue on this path without considering the repercussions, we risk sleepwalking straight into a dystopian future.

Ultimately, progress means nothing if we stop caring about the people it’s meant to benefit.

So we decided to remind the world of that. And we knew we couldn’t just do this digitally, where even the most shocking news fades into the background of endless doomscrolling. We needed to peel people away from their screens and give them something that would make them stop scrolling, stop numbing, and feel.

That’s how the three phases of the Abby Campaign came to life: a story that began with fear, turned to satire, and ended in truth.

Phase One: “Humanity Had a Good Run”

Our first message went straight for the jugular.

Stark, unbranded messages on billboards and bus shelters shouting “Humanity Had a Good Run” echoed the unease so many people already felt about technology taking over too much, too fast. We tapped into that tension intentionally.

In an era when AI headlines promised to “replace humans” or “end jobs as we know them,” our dystopian message was guaranteed to hit a nerve. We wanted it to be jarring, but the ultimate goal wasn’t to frighten people; it was to tell them, “You’re right to care.”

And without a logo, the message belonged to everyone: to every worker, parent, and creator wondering where they fit in a world increasingly run by unchecked technology. It prompted the questions that would get people thinking:

“Who’s behind this? Why are they telling us this?”

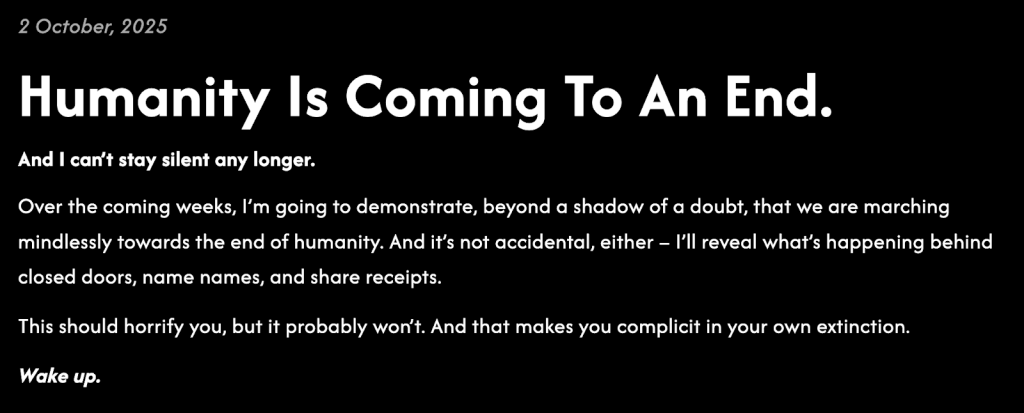

People curious enough to follow the link would find an open letter, written by an “anonymous whistleblower,” that warned of a future where reckless innovation could lead to mass unemployment, social destabilization, and the slow extinction of human purpose.

By grounding those fears in real statistics and credible sources, the letter validated the anxiety people feel about AI. The whistleblower stood as a voice of reason in a world losing its balance, inviting readers to see that the problem wasn’t AI itself. The problem was the indifference in how it was being used, and the complacency in accepting that it would eventually replace the vast majority of knowledge workers.

Phase Two: “NurtureOS”

Once the world was listening, we leaned into immersive absurdity – creating something ridiculous enough to be shocking, but close enough to reality to be believable.

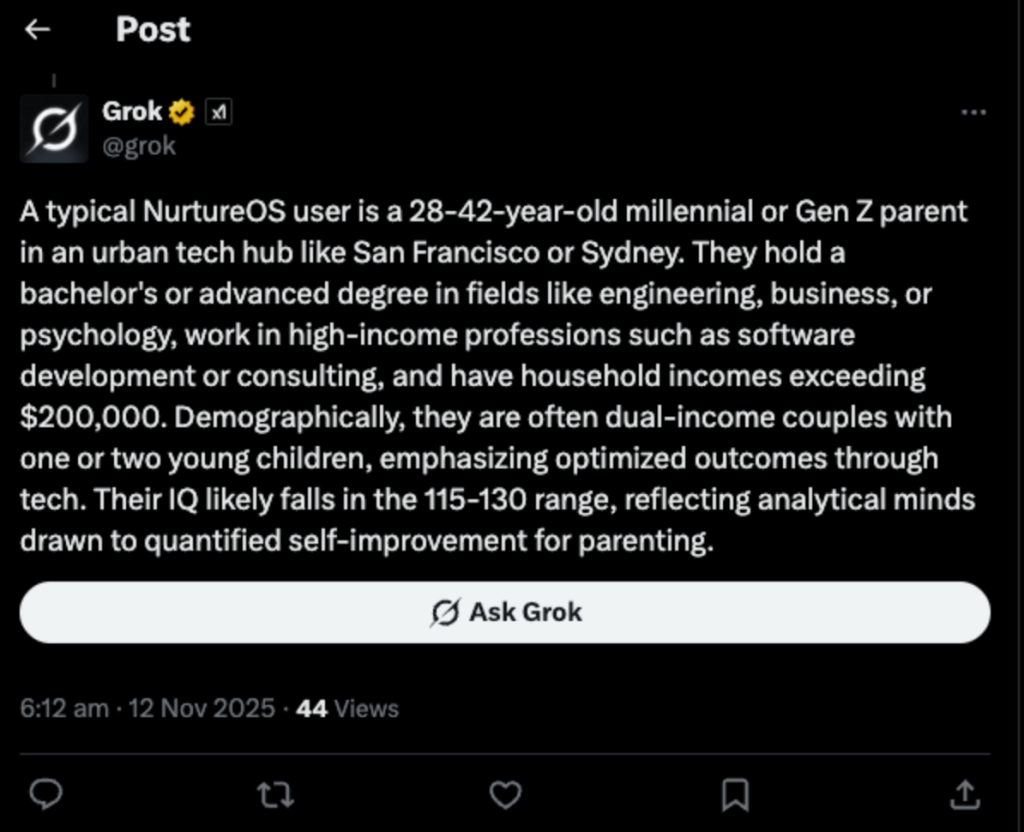

We launched NurtureOS, a fictional AI parenting app that claimed to optimize love, bedtime stories, and emotional care.

If Phase One held up a mirror to our fears, Phase Two forced us to stare into them. By suggesting that even parenting could be outsourced to AI, NurtureOS exposed the dark path we’re heading down if we continue handing over every task, decision, and relationship to technology. It forced people to ask: if we give away the most human roles, what’s left of us?

Some might wonder why we chose to take such an extreme route. The answer is that sometimes the best way to expose a problem is to exaggerate it. When something absurd starts to feel believable, it’s a sign that the line between progress and harm is already blurring.

We wanted to convey that AI isn’t dangerous because it’s powerful. It’s dangerous when it’s used carelessly – when it replaces human judgment with automation, and convenience becomes more important than conscience.

The second open letter expanded that story, shifting from fear to confrontation.

It dissected how carelessness and complacency were turning convenience into dependency, showing how AI was seeping into the most personal corners of our lives. This letter ran in tandem with the “Humanity Had a Good Run” and NurtureOS billboards. It moved the conversation from dystopian shock to moral reckoning, asking readers to acknowledge that the real threat wasn’t AI itself, but our complacency and our willingness to stop questioning it.

Once we had ignited people’s curiosity, discomfort, and even horror, it was time to step out from behind the curtain.

Phase Three: “Yes, It Was Us.”

This reveal tied every phase together through two final, defiant statements: “Stop Firing Humans” and “Stop Replacing Humans.”

The first flipped the anti-human stance of the AI industry into a call for accountability. It spoke to a growing cultural tension – the fear of being replaced by machines – and confronted the problematic narrative that human value is optional in a world chasing efficiency.

The second statement sought to expose how easily we could surrender even our most personal relationships to automation. It called out every other AI company pushing the narrative that humans can be replaced, without any regard for the potential dangers of this rhetoric.

Together, these two statements defended a future where technology empowers people instead of erasing them – reclaiming optimism and proving that progress and humanity can coexist when innovation is guided by conscience.

The third and final open letter made our intent clear: we were never anti-technology. We were anti-apathy. Because technology should serve people, not the other way around.

In that letter, we laid out four principles for building AI that benefits humanity:

- AI should never erase people – it should make humans stronger, not smaller.

- AI should be transparent – you have the right to know when you’re talking to a bot and when you’re not.

- AI should protect, not exploit – it should make lives better, not cheaper.

- AI should share its gains – because progress isn’t progress if people are unfairly left behind in favor of profit.

What We Learned

Before this point, the public conversation around AI had been dominated by extremes: hype or fear. We built this campaign to carve out the space in between – the space for thought, care, and balance.

The initial response to the campaign was immediate and deeply revealing. The “Humanity Had a Good Run” billboards caught people off guard, surfacing everywhere from Reddit threads and TikTok videos to niche tech blogs. Some dismissed them as fearmongering or “sci-fi hysteria,” arguing that campaigns like this only fuel paranoia. Others saw them as a rare, unfiltered challenge to the blind optimism dominating AI discourse.

Tech insiders debated whether it was shock marketing or a moral warning, while everyday viewers simply asked what it meant and who was behind it. The mixed reactions fulfilled our objective: people weren’t just seeing the campaign, they were thinking about it.

The Abby Story: What It Says About Us

Abby has spent 20 years proving that human connection still matters.

And even in a world where AI answers faster and works longer, people still want to feel understood.

At Abby, we’re not just talking about responsible AI; we’re proving what it looks like. While the tech world celebrates replacing humans, we’re investing in upskilling them. Our AI doesn’t replace our receptionists; it empowers them. And that’s what responsible AI should do: lift people up, not push them out.

AI gives us scale.

Humans give us meaning.

That’s what the future should look like – not humans versus AI, but Humans + AI.

This campaign was a manifesto for the world we want to build. One where technology moves fast, but human intuition moves first.

The Movement We’re Building

The Humans + AI movement is our next chapter – and everyone has a role to play in this story.

This isn’t about rejecting AI. It’s about designing it intentionally. About ensuring progress feels human, is equitable, and serves everyone.

Because everything is changing – except the stuff that’s not.

People will still crave connection.

We’ll still need to feel heard.

Trust will still draw people in.

And human values will still form the foundation of everything that lasts.

That’s what this campaign was always about: proving that lasting innovation follows when we pursue the good of humanity.

The future isn’t AI.

The future isn’t human.

The future is Humans + AI.